Technology, like myriad man-made things, is a double-edged sword. On one hand, it can be abused and used for less virtuous purposes. On the other, technology can be good for a great many things: enriching lives, propelling the human race forward, and tackling the world’s most pressing social and environmental challenges. To learn more, I recently caught up with Santanu Dutt, the head of technology and chief technology officer for South East Asia at Amazon Web Services (AWS), who shared how technology can be used to make the world more inclusive, specifically for persons with disabilities (PWD).

According to the Social Welfare Department of Malaysia, as of 2018, there are over 450,000 persons with physical disabilities, with almost 17 per cent of these having some form of speech, hearing, or visual impairment.

The everyday life of these PWDs is distinctly different from yours and mine, and from that of others who are able-bodied. Everyday communication can be challenging, even though their loved ones may have learned to communicate with them through sign language, lip-reading, or Braille.

I’m sure you’ve come across someone who is differently-abled, whether a stranger in the street, a barista at Starbucks, or perhaps a member of the family. The struggle is real, but it can be better.

As technology advances, tools have been developed to help persons with disabilities cope better with everyday life.

In an AWS context, the company has various technologies and services that can be harnessed to build “Tech for Good” solutions.

For instance, there is Amazon Polly, which turns text into natural, lifelike speech. Polly’s Text-to-Speech (TTS) service uses advanced deep learning technologies to synthesize natural-sounding human speech.

Using Amazon Lex, which is built on the same deep learning technologies that power Amazon Alexa, developers can build sophisticated, natural language chatbots.

Next, there is Amazon Transcribe, which automatically converts speech to text using a deep learning process called automatic speech recognition (ASR).

Encapsulating some of these technologies is Amazon Alexa, the familiar voice assistant that powers the Amazon Echo family of devices as well as other compatible devices.

How do all these Amazon services serve as building blocks to make life better?

Perhaps The Pollexy Project can provide some context. AWS cloud architect Troy Larson used a combination of Amazon Polly, Amazon Lex, and a Raspberry Pi to develop Pollexy, a special needs voice assistant, to communicate with his 16-year-old autistic son, Calvin.

Troy hopes these technologies can help Calvin transition into adulthood and potentially live semi-independently without the need for constant physical supervision.

“The idea behind Pollexy is to build a system that is aware of a person’s specific physical need and is built around the ability to prompt them to give them that quality of life; that sense of confidence where they can function almost independently. It’s invisible and it’s non-intrusive,” explained Troy.

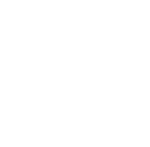

Another case study that Santanu shared was the deeplens-asl tool, which enables Alexa to respond instantly to American Sign Language (ASL). AWS DeepLens becomes an interface for those who can speak and interact in the right languages by translating ASL to written/spoken English in real time.

There are many more examples of real-world applications of AWS technologies for the greater good. Cochlear, for instance, uses AWS for an automated solution to get replacement parts for hearing devices delivered to customers in 24 hours or less.

Then there’s Halodoc, which offers tele-consultation with healthcare professionals and a pharmaceutical delivery service through its mobile app. AWS has helped Halodoc grow its user base sixfold, from 100,000 to 600,000, within six months. It has also helped the company bring new app features to market 30 per cent faster than with the previous setup.

I was curious to know if any of our e-hailing platforms use AWS technologies with specific applications targeted at the differently-abled. The fact that an e-hailing player such as Grab employs over 500 differently-abled driver- and delivery-partners led me to imagine how a solution like sign2speak could be used to help customers and drivers/delivery-partners communicate better.

While Grab is on AWS, Santanu replied, there are currently no use cases for such a purpose.

AWS recently partnered with WWF-Indonesia to accelerate efforts to save critically endangered orangutans in Indonesia.
